|

Cryptome DVDs are offered by Cryptome. Donate $25 for two DVDs of the Cryptome 12-years collection of 46,000 files from June 1996 to June 2008 (~6.7 GB). Click Paypal or mail check/MO made out to John Young, 251 West 89th Street, New York, NY 10024. The collection includes all files of cryptome.org, jya.com, cartome.org, eyeball-series.org and iraq-kill-maim.org, and 23,000 (updated) pages of counter-intelligence dossiers declassified by the US Army Information and Security Command, dating from 1945 to 1985.The DVDs will be sent anywhere worldwide without extra cost. | |||

8 May 1998

High-performance Computing, National Security Applications,

and

Export Control Policy at the Close of the 20th Century

66

Building on the Basics: An Examination of High-Performance Computing Export Control Policy in the 1990s established upper-bound applications as one of the three legs supporting the HPC export control policy [1]. For the policy to be viable, there must be applications of national security interest that require the use of the highest performance computers. Without such applications, there would be no need for HPC export controls. Such applications are known as ' upper-bound applications." Just as the lower bound for export control purposes is determined by the performance of the highest level of uncontrollable technology, the upper bound is determined by the lowest performance necessary to carry out applications of national security interest. This chapter examines such applications and evaluates their need for high performance computing to determine an upper bound.

Key application findings:

- The demand for memory often drives applications to parallel platforms.

- The Message Passing Interface (MPI), a package of library calls that facilitates writing parallel applications, is supporting the development of applications that span a wide range of machine capabilities.

Upper bound applications exist

The Department of Defense (DoD) has identified 10 Computational Technology Areas (CTA), listed in Table 10. Through the High Performance Computing Modernization Program (HPCMP), DoD has continued to provide funding for the acquisition and operation of computer centers equipped with the highest performance computers to pursue applications in these areas. Not all of these areas, however, serve as sources for upper-bound applications. Not all applications within the CTA's satisfy the criteria of this study. Some are almost exclusively of

67

civilian concern and others require computing resources well below the threshold of controllability.

| CFD | Computational Fluid Dynamics | CEN | Computational Electronics and Nanoelectronics |

| CSM | Computational Structural Mechanics |

CCM | Computational Chemistry and Materials Science |

| CWO | Climate/Weather/Ocean Modeling and Simulation |

FMS | Forces Modeling and Simulation/ C4I |

| EQM | Environmental Quality Modeling and Simulation |

IMT | Integrated Modeling and Test Environments |

| CEA | Computational Electromagnetics and Acoustics |

SIP | Signal/Image Processing |

Table 10: DoD Computational Technology Areas (CTA)

There are applications that are being performed outside DoD that do not directly fall under any of the CTA's. These applications include those in the nuclear, cryptographic, and intelligence areas. These areas are also covered in this study.

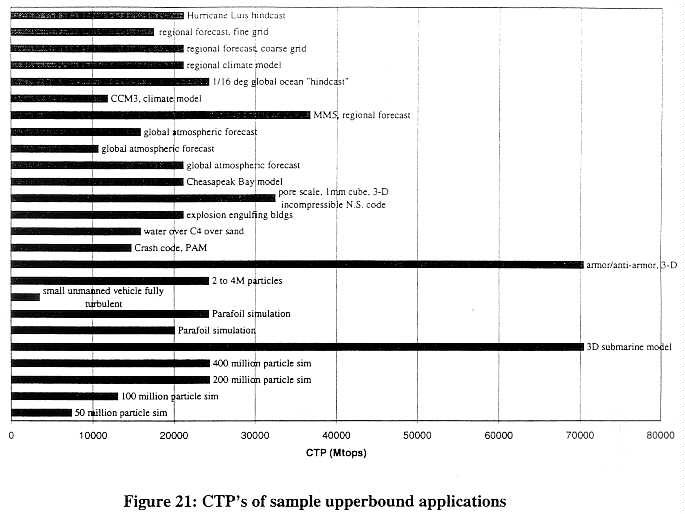

There remains a strong core of applications of national security concern, requiring high levels of computing performance. Figure 21 shows a sampling of specific applications examined for this study. As detailed in later sections of this chapter, these applications continue to demand computing performance well above the current export limits, and in most cases will remain well above the lower bound of controllability for the foreseeable future.

68

Role of Computational Science

Computational science is playing an increasingly important role in research and development in almost every area of inquiry. Where danger exists in the development and testing of prototypes, simulation can reduce the danger. Simulation can support the investigation of phenomena that cannot be observed. Simulation reduces costs by reducing the number of different prototypes that need to be built. Finally, simulation increases the effectiveness of a testing program while reducing the number of actual tests needed.

In some areas, computer simulation is already a tightly integrated part of the development and testing cycle. In other areas, simulation techniques are still being developed and tested. In all areas, simulation techniques will see continued improvement, as better computer hardware becomes available. This will allow experiments that are not possible today due to constraints of memory and/or processors. Better hardware will also support parametric studies, that is, allow the researcher to run a suite of tests varying one or more of the parameters on each run.

69

In some areas. high performance computing directly supports operational needs. Weather forecasting is a clear example of this. Improved hardware leads directly to more accurate global forecasts. Better global forecasts lead to more accurate regional forecasts. The number of regional forecasts that can be developed within operational time constraints is directly dependent on the computing resources available.

Demand for greater resources

Computational science continues to demand greater computing resources. There are three driving factors behind this quest for more computing performance. First, increasing the accuracy, or resolution, of an application generally allows more detail to become apparent in the results. This detail serves as further validation of the simulation results and provides further insight into the process that the simulation is modeling. Second, increasing the scale of a simulation allows a larger problem to be solved. Examples would be increasing the number of molecules involved in a molecular dynamics experiment or studying a full-size submarine complete with all control surfaces traveling in shallow water. Larger problems thus become a path into understanding mechanisms that previously could only be studied through actual experiments, if at all. Finally, moving to models with greater fidelity. Examples here include using full physics rather than reduced physics in nuclear applications and solving the full Navier-Stokes equations in fluid dynamics problems.

All three--increased resolution, increased scale, and improved fidelity--offer the possibility of a quantum leap in understanding a problem domain. Examples include studies of the dynamics among atoms and molecules of an explosive material as the explosive shock wave proceeds, or eddies that form around mid-ocean currents. Ideally, a good simulation matches the results of actual observation, and provides more insight into the problem than experiment alone.

Increased resolution and scale generally require a greater than linear growth in computational resources. For example, a doubling of the resolution requires more than a doubling of computer resources. In a three-dimensional simulation, doubling the resolution requires a doubling of computation in each of three dimensions, a factor of eight times the original. And this example understates the true situation, as a doubling of the resolution often requires changes in the time scale of the computation resulting in further required increases in computing resources.

Memory demands are often the motivation behind a move from a traditional vector platform to a massively parallel platform. The MPP platforms offer an aggregate memory size that grows with the number of processors available. There are currently MPP machines that have one gigabyte of memory per node, with node counts above 200.2 The attraction of such large memory sizes is encouraging researchers to make the effort required (and it is considerable) to move to the parallel world.

_______________________

2 Aeronautical Systems Center (ASC), a DoD Major Shared Resource Center located at Wright-Patterson AFB has a 256 node IBM SP-2 with one gigabyte of memory per node, for a total of 256 gigabytes.

70

Transition from parallel-vector to massively-parallel platforms

Scientific applications in all computational areas are in transition from parallel vector platforms (PVP) to massively parallel platforms (MPP). Hardware developments and the demands--both price and performance--of the commercial marketplace, are driving this transition. These trends have already been discussed in detail in chapter 2. Computational scientists in some application areas made early moves to MPP platforms to gain resources unavailable in the PVP platforms--either main memory space or more processors. However, all areas of computational science are now faced with the need to transition to MPP platforms.

The development of platform independent message-passing systems, such as PVM [2] and p4 [3], supported the first wave of ports from traditional PVP machines to MPP machines. The platform independence helped assure the scientists that their work would survive the vagaries of a marketplace that has seen the demise of a number of MPP manufacturers. The development of the Message Passing Interface [4] standard in 1994 and its wide support among computer manufacturers has accelerated the move to MPP platforms by scientists.

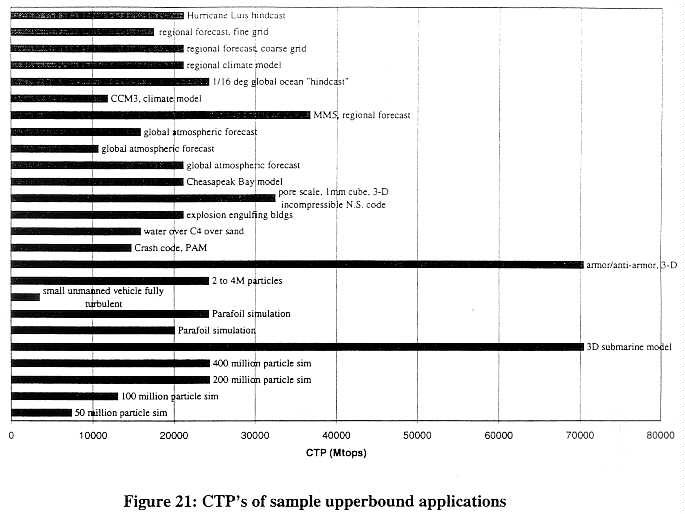

The move to MPI has resulted in applications that can run effectively on a broad range of computing platforms and problem sizes, in contrast to PVP codes that rely heavily on vector operations for efficient execution. Figure 22 illustrates the transitions possible. From a code optimized for vector operations, it is not easy to obtain a code that runs well on a desktop workstation, or any strictly parallel machine. However, once the effort has been made to port the PVP code to the MPP platform using MPI, it is an easy transition to run the same code on smaller

71

or larger MPP systems by simply specifying the number of processors to use. Since all the computer manufacturers provide support for MPI, the code is now portable across an even wider variety of machines.

The smaller systems generally cannot run the same problems as the larger systems due to memory and/or time constraints. However, being able to run the code on smaller systems is a great boon to development. Scientists are able to test their code on the smaller, more accessible, systems. This shortens the development cycle and makes it possible for researchers without direct access to the larger platforms to be involved in the development and verification of new algorithms.

Parallel coding starting to "come naturally"

Production codes are now entering their second generation on parallel platforms in some application areas. Examples include work in the nuclear area, ocean modeling, and particle dynamics. A common characteristic in many of these applications is a very high memory requirement. Starting roughly with the Thinking Machines CM2, parallel machines began offering greater memory sizes than were available on vector machines. This offered the opportunity to run simulations if the researcher was willing to put in the effort to port the code. Now, those researchers are entering second and even third generation revisions of their codes, and have production as well as R & D experience with parallel machines. Thus, there is a growing cadre of researchers for whom parallel codes have become the ordinary way of working.

Parallel platforms used for production work

Large parallel platforms are being used for production work, as well as for research and development. In general, this use is in application areas that were among the earliest to make the move from the vector processors. Examples include nuclear simulations, particle simulations, cryptographic work, and ocean modeling.

Memory size and bandwidth remain bottlenecks.

Many application areas are moving to parallel platforms primarily to gain the increased aggregate memory sizes available on these systems. The memory to CPU bandwidth then becomes a problem on the commodity RISC-based processors used on the parallel systems. Many problems become sensitive to the size of the cache and often require work to optimize the cache performance.

The other side of this argument, however, is the increased memory sizes available on standard workstations. Problems are now being executed on these workstations that were on supercomputer-class systems a few years ago. These problems were often run as single-CPU jobs on the vector machines, as the primary requirement was the memory size. On workstations, they can require long execution times and often require large disk space as well. However, given the wait time for sufficient resources on high-end computers, it can turn out to be quicker to use a

72

workstation. Where multiple workstations are available, parametric studies can be run simultaneously.

Computing Platforms and Scientific Applications

This section provides a brief discussion of key factors that affect the performance of an application on a parallel computer. A great deal of detail has been omitted to make the discussion accessible. Details that affect specific application areas are covered later in this chapter.

Determinants of performance

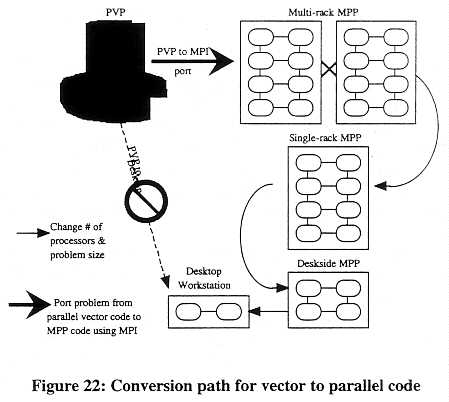

Most scientific applications make use of grids to subdivide the problem space. Grids can be unstructured or structured. Unstructured grids are typically triangular for 2-D problems and tetrahedral for 3-D problems. Finite element (FE) solution techniques are used with unstructured grids. Structured grids are "logically" Cartesian or rectangular in form. Finite difference (FD) or finite volume (FV) solution techniques are used with structured grids. Figure 23 gives some two dimensional examples of solution grids.

In parallel processing, the grids are divided in some fashion across two or more processes, a step known as "domain decomposition." Each process performs the computations on its portion of the grid. At various times during the computation, it is normally necessary for the processes to share data from their portion of the grid with the processes handling the adjacent portions of the grid. When executing on a parallel machine that has multiple processors, each process is typically assigned to one processor. This spreads the computational work over as many processors as are available.

During execution of an application that is spread across multiple processors, each processor will, generally speaking, be in one of three states at a given time:

73

The total execution time (T) of a given processor is the sum of the times spent in each of these three states:

T = Tcomp + Tcomm + Tidle

Tcomp is a function of the number of processes executing on the processor. For each process, Tcomp is further dependent on the grid size, the number of time steps, the number and types of operations executed in each time step, and the time needed to execute an operation. More complex (and realistic) performance models take into account differences in the time needed to execute an operation depending on where data are located (e.g. main memory or secondary storage).

Tcomm is the amount of time the processor spends on exchanging data with other processors. This will be platform dependent, and is dependent on the architecture--shared memory vs. distributed memory.

Tidle is the time a processor spends waiting for data. Generally, this will be while waiting for another processor to complete a task and send the needed data, or merely waiting for requested data to arrive at the CPU.

If execution time is measured from the instant the first processor begins until the last processor ceases activity, then the total execution time for each processor will be the same, although the percentage of time spent in each state is likely to vary.

If only compute time directly advances the state of the computation and the amount of computation necessary for the execution of a particular model and problem size is relatively constant, then performance can best be improved by minimizing the time the processors spend communicating or being idle. The communication requirements strongly depend on the domain decomposition used--how a problem is divided across the multiple processes and how the processes are divided across the machine' s available processors. Different decompositions of the same problem onto the same set of processors can have widely different communication patterns. One goal of problem decomposition is to maximize t'ne amount of computation performed relative to the amount of communication.

Applications and the algorithms they use can differ considerably in how easy it is to find decompositions with good computation-to-communication ratios. So-called "embarrassingly parallel" applications are those that lend themselves to decompositions in which a great deal of computation can be performed with very little communication between processors. In such cases, the execution of one task depends very little, or not at all, on data values generated by other tasks. Often, applications with this characteristic involve solving the same problem repeatedly, with different and independent data sets, or solving a number of different problems concurrently.

74

Shared memory multiprocessor systems.

One broad category of multiprocessors is the shared-memory multi-processor, where all the processors share a common memory. The shared memory allows the processors to read and write from commonly accessible memory locations. Systems in this category include symmetrical multiprocessors (SMP) such as the Silicon Graphics Challenge and PowerChallenge systems, Sun Microsystems' Enterprise 10000, and many others. Cray's traditional vector-pipelined multiprocessors can also be considered part of this broad category, since each processor has equal access to shared main memory.

In the abstract, shared memory systems have no communication costs per se. Accessing the data values of neighboring grid points involves merely reading the appropriate memory locations. Most shared-memory systems built to date have been designed to provide uniform access time to main memory, regardless of memory address, or which processor is making the request.

In practice, the picture is more complex. First, processors must coordinate their actions. A processor cannot access a memory location whose content is determined by another processor until this second processor has completed the calculation and written the new value. Such synchronization points are established by the application. The application must balance the workload of each processor so they arrive at the synchronization points together, or nearly so. Time spent waiting at a synchronization point is time spent not advancing the computation.

Second, most of today's shared-memory machines include caches as part of the memory design. Caches are very fast memory stores of modest size located at or near each processor. They hold data and instruction values that have been recently accessed or, ideally, are likely to be accessed in the immediate future. Caches are included in system design to improve performance by reducing the time needed to access instructions and their operands. They are effective because in many cases the instructions and operands used in the immediate future have a high probability of being those most recently used.3 Caches necessarily involve maintaining duplicate copies of data elements. Maintaining the consistency of all copies among the various processors' caches and main memory is a great system design challenge. Fortunately, managing cache memory is not (explicitly) a concern of the programmer.

The existence of cache memory means that memory access is not uniform. Applications or algorithms with high degrees of data locality (a great deal of computation is done per data element and/or data elements are used exclusively by a single processor) make good use of cache memory. Applications which involve a great deal of sharing of data elements between processors, or little computation per data element are not likely to use cache as effectively and, consequently, have lower overall performance for a given number of computations.

___________________

3 For example, a typical loop involves executing the same operations over and over. After the first iteration, the loop instructions are resident in the cache for subsequent iterations. The data values needed for subsequent iterations are often those located in memory very close to the values used on the first iteration. Modern cache designs include fetch algorithms that will collect data values "near" those specifically collected. Thus, the data for several iterations may be loaded into the cache on the first iteration.

75

Third, when the processors in a shared-memory multiprocessor access memory, they ma~ interfere with each other's efforts. Memory contention can arise in two areas: contention for memory ports and contention for the communications medium linking shared memory with the processors. Memory may be designed to give simultaneous access to a fixed and limited number of requests. Usually, separate requests that involve separate banks of memory and separate memory ports can be serviced simultaneously. If, however, two requests contend for the same port simultaneously, one request must be delayed while the other is being serviced.

A shared communications medium between memory and processors can also be a bottleneck to system performance. internal busses, such as those used in many SMP systems can become saturated when many processors are trying to move data to or from memory simultaneously. Under such circumstances, adding processors to a system may not increase aggregate performance. This is one of the main reasons why maximum configuration SMP's are rarely purchased and used effectively.

Some systems use switched interconnects rather than a shared medium between processors and memory. While more expensive, switched interconnects have the advantage that processors need not contend with each other for access. Contention for memory may still be a problem.

Distributed memory multiprocessor systems.

Distributed memory machines can overcome the problems of scale that shared memory systems suffer. By placing memory with each processor, then providing a means for the processors to communicate over a communications network, very large processor counts can be achieved. The total amount of memory can also be quite large. In principle, there are no limits to the number of processors or the total memory, other than the cost of constructing the system. The SGI Origin2000, Cray T3E, and IBM RS/6000 SP are examples of distributed memory architectures that are closely coupled. Clusters of workstations are distributed memory machines that are loosely coupled.

For distributed memory machines, the communication time, Tcomp, is the amount of time a process spends sending messages to other processes. As with shared memory machines, two processes that need to share data may be on the same or different processors. For a given communication (sending or receiving a message), communication time breaks into two components: latency and transmission time. Latency time--in which various kinds of connection establishment or context switching are performed--is usually independent of the message size. It is dependent in varying degrees on the distance between processors and the communication channel. Transmission time is a function of the communication bandwidth - the amount of data that can be sent each second--and the volume of data in a message. The distinction between closely coupled and loosely coupled machines lies primarily in the latency costs, as explained in chapter 2.

Even for applications that are not embarrassingly parallel, the ratio of computation to communication can sometimes be improved by increasing the granularity of the problem, or the portion of the problem handled by a single processor. T'his phenomenon is known as the surface-to-volume effect. As an illustration, suppose that a problem involved a two-dimensional grid of

76

data points. Updating the value at each point involves obtaining the values of each of the four neighboring points. If a different processor is used to update each grid point. then for one computation, four communications are required to obtain the neighbor's data values. If, however. each processor were responsible for updating an 8 x 8 = 64 grid-point portion of the grid, 64 computations would be needed (one for each grid point), but only 8 x 4 = 32 communications. No communication is involved in accessing data from a neighboring grid point when the data values are stored in the memory of the same processor. Communication occurs only for fetching the data values of grid points lying outside the perimeter of the 64-point grid, since these are located in the memory of other processors. In a two-dimensional grid, the amount of computation is proportional to the number of points in a subgrid (the volume), while the amount of communication is proportional only to the number of points on the perimeter (the surface). Further, the messages often can be batched along an edge. Following the same example, the one processor can request the 8 values for one side of its grid in a single message, and can send its own 8 values on the same edge in one message. The amount of data sent will be the same, requiring the same bandwidth; however, the latency will be reduced since the start-up cost needs to be paid for only one message, not eight.

While increasing the granularity for a fixed-sized problem improves the computation-to-communications ratio, it also reduces the amount of parallelism brought to bear. (The extreme case would be putting the entire problem on a single processor: excellent computation-to-communications ratio, but no parallelism.) For a fixed number of processors, however, the computation-to-communication ratio can be improved by increasing the size of the problem and, correspondingly, the number of grid points handled by a single processor. This assumes, however, that the processor's memory is large enough to hold the data for all the points; the paging overhead associated with virtual memory is likely to decrease performance dramatically.

Numerical Weather Forecasting

Introduction

Weather conditions have always played a vital role in a host of military and civilian activities. The ability to predict in advance the weather conditions in a particular area can provide a substantial competitive advantage to those planning and executing military operations. For this reason, in spite of the obviously beneficial civilian applications, weather forecasting is considered an application of national security significance.

Since the dawn of the computer age, the most advanced computing systems have been brought to bear on computationally demanding weather forecasting problems. Historically, weather forecasters have been among the leading adopters of new supercomputing technology. While this tradition continues to the present, weather forecasters have been challenged by the broadening base of computing platforms that, in principle, can be used in weather forecasting. Besides the well-established vector-pipeline processors, practitioners have other options, including massively parallel and symmetrical multiprocessor systems.

77

Numerical weather forecasting was identified in Building on the Basics as a crucial upper-bound application. This section examines the computational nature of numerical weather forecasting in much greater detail than did the previous study, with the goal of understanding to what degree such applications can be usefully executed on a variety of system architectures, principally vector-pipeline and massively parallel processors. Ultimately, the goal is to understand the degree to which forecasting of a nature useful to military operations can be performed on the uncontrollable computer technology of today and tomorrow.

This section starts by examining the relationship between the computational nature of the application and the major high-performance computer architectures currently available. The next subsection includes a discussion of empirical results obtained from leading forecasting centers in the United States and Europe regarding the use of various platforms to run their atmospheric models. Then we discuss global and regional ocean forecasts and their similarities and differences from atmospheric models. Finally, we conclude with a brief discussion of future trends in numerical weather forecasting and computational platforms, and alternative sources of competitive advantage for U.S. practitioners.

The Computational Nature of Numerical Weather Forecasting

The state of the weather at any given point in time can be defined by describing the atmospheric conditions at each point in the atmosphere. These conditions can be characterized by several variables, including temperature, pressure, density, velocity (in each of three dimensions). Over time, these conditions change. How they change is represented mathematically by a set of partial differential and other equations that describe the fluid dynamic behavior of the atmosphere and other physical processes in effect. The fluid dynamics equations include:

conservation of momentum

conservation of water substance4

conservation of mass

conservation of energy

state (pressure) equation

Equations for physical processes describe such phenomena as radiation, cloud formation, convection, and precipitation. Depending on the scale of the model, additional equations describing localized turbulence (e.g. severe storms) may be used.

Collectively, these equations constitute an atmospheric model. In principle, if the state of the weather at a given time is known, the state of the weather at a future time can be determined by performing a time integration of the atmospheric model based on these initial conditions.

The basic equations and the atmosphere itself are continuous. Like many numerical models of physical phenomena, however, the atmospheric models approximate the actual conditions by

___________________

4 The conservation of water substance equations govern the properties of water in various states: vapor, cloud, rain, hail, snow.

78

using a collection of discrete grid points to represent the atmospheric conditions at a finite number of locations in the atmosphere. For each grid point, values are stored for each of the relevant variables (e.g. temperature, pressure, etc.).

Future weather conditions are determined by applying discrete versions of the model equations repeatedly to the grid points. Each application of the equations updates the variables for each grid point and advances the model one "time step" into the future. If, for example, a single time step were 120 seconds (2 minutes), a 24-hour forecast would require 24 hours/2 minutes = 720 applications (iterations) of the model's equations to each grid point.5

The fluid dynamics of the atmosphere is typically modeled using either a finite difference method, or a spectral transform method. With the finite difference methods, variables at a given grid point are updated with values computed by applying some function to the variables of neighboring grid points. During each time step, the values at each grid point are updated, and the new values are exchanged with neighboring grid points.

The spectral transform methods are global spherical harmonic transforms and offer good resolution for a given amount of computational effort, particularly when a spherical geometry (like the earth) is involved.6 The drawback, however, is that the amount of computation necessary in spectral methods grows more quickly than in finite difference methods as the resolution increases. Practitioners are divided on which method is better.

Numerical weather prediction models carl also vary in the scale of the model, the length of the forecast, the physical phenomena to be modeled, and the degree of resolution desired. The models vary in the number of grid points used (and, in the case of spectral methods, the number of waves considered in the harmonics),7 the number of time-steps taken, and the equations employed.

Current models used in military weather forecasting can be classified as global, mesoscale (regional)8, theater, or battlespace (storm-scale). The domain for these models ranges from the entire planet (Global) to a 1000 x 1000 km region (battlespace). For each of these models, weather can be forecast for a variety of time periods into the future. Typical forecasts today are from I2 hours to 10 days.

_____________________

5 The Naval models described below use time steps of 440s for global models, 120s for regional models, and smaller time steps for each under certain circumstances, such as when there are high atmospheric winds, or when higher resolution is needed for localized conditions. Other models may use different time steps.

6 The basic grid point mesh has a rectangular solid geometry. The spherical geometry of the earth means that if the same number of grid points represents each latitude, the geographic distance between these points is less as you approach the North or South Pole. Such considerations introduce complexities into the finite difference algorithms.

7 The resolution of models using spectral techniques is usually indicated by the convention Tn, where n is the number of harmonics considered. A model designated T42 has a lower resolution than one designated T170. The NOGAPS (Global) model used by Fleet Numerical is designated T159.

8 Mesoscale models typically model a domain with a scale of a few thousand kilometers. Such models are particularly useful for forecasting severe weather events, simulating the regional transport of air pollutants, etc. Mesoscale models are regional models, but the latter term is also used to describe extracts of global models.

79

Models can also be classified as hydrostatic, or non-hydrostatic. Hydrostatic models are designed for use on weather systems in which the horizontal length scale is much greater than the vertical length scale. They assume that accelerations in the vertical direction are small, and consequently are not suitable for grid spacing below 5-10 km. Non-hydrostatic models. on the other hand, do not make this assumption and are often employed in systems that model, for example, thunderstorms or other phenomena on this scale. Current military models that emphasize global and regional forecasts are hydrostatic, but work is being done to develop non-hydrostatic models as well.

The resolution of the model depends on both the physical distance represented by two adjacent grid points, and the length of the time step. A model in which grid points represent physical coordinates 20 km apart will generally give more precise results than one in which grid points offer a resolution of only 100 km. Similarly, shorter time steps are likely to yield better results than longer ones. The greater the resolution, the greater the fidelity of the model. For example, certain kinds of turbulence may be modeled with grid points at 1 km, but not 80 km, resolution. At the same time, however, increasing the number of grid points or decreasing the time step increases the amount of storage and computation needed.

The choice of grid size, resolution, and timestep involves balancing the type of forecast desired against the computational resources available and the time constraints of the forecast. To provide useful predictions, a model must run sufficiently fast that the results are useful (e.g. a 24-hour forecast that takes 24 hours to compute is of no use.) For this reason, there are clear limits to the size of the model that can be run on a given platform in a given time window. On the other hand, there are points beyond which greater resolution is not needed, where practitioners feel a particular model size is sufficient for a desired forecast. For example, the "ultimate" domain size necessary for storm-scale prediction is a 1024 x 1024 x 32 grid [5].

Military Numerical Weather Forecasting

Military Numerical Weather Forecasting Models

Table 11 shows some of the models used by the Fleet Numerical Meteorology and Oceanography Center for military numerical weather prediction, as of the end of 1995 [6]. The global models are currently their principle products. The right-hand column indicates the elapsed time needed to run the model.

80

| Type | Definition | Resolution | Wall Time |

| Global Analysis | MVOI9 | Horizontal: 80 km Vertical: 15 levels |

10 min/run |

| Global Forecast | NOGAPS10 3.4 | Horizontal: 80 km Vertical: 18 levels |

30 min/forecast day |

| Regional 9000 km x 9000 km |

NOGAPS Extracts |

||

| Theater 7000 km x 7000 km |

NORAPS11 6.1 relocatable areas |

Horizontal: 45 km Vertical: 36 levels |

35 min/area |

| Mesoscale 3500 km x 3500 km |

NORAPS 6.1 2 areas

NORAPS 6.1 |

Horizontal: 20 km Vertical: 36levels

Horizontal: 15 km |

60 min/area 75 min/area |

Table 11. Weather prediction models used by Fleet Numerical MOC.

To understand the significance of the wall time figures, it is important to understand the real-time constraints of a center like Fleet Numerical. Weather forecasting is not a one-time computation. Fleet Numerical's many customers require a steady stream of forecasts of varying duration. Given a finite number of CPU hours per day, the CPU time used to generate one forecast may take time away from another. For example, if a global forecast were to take 45 rather than 30 minutes per forecast day, one forecast might start encroaching on the time allocated for the next forecast. Either the number of forecasts, or the duration of each, would have to be reduced. When weather forecasters increase the resolution or the grid size of a particular model but use the same hardware, one forecast run can start cutting into the next run. For example, when the mesoscale model was run to support NATO operations in Bosnia, improving resolution from 20km to 15km doubled the run time. Better resolution would be possible computationally, but impossible operationally on the current hardware. Operational considerations place strong constraints on the types of models that can be run with the available hardware.

_________________

9 Multi-variate Optimum Interpolation. Used to provide the initial conditions for both the global and regional models (NOGAPS and NORAPS), but at different resolutions.

10 Navy Operational Global Atmospheric Prediction System.

11 Navy Operational Regional Atmospheric Prediction System.

81

The Traditional Environment for Military Numerical Weather Forecasting

The current state of military numerical weather forecasting in the United States is a result of a number of current and historical circumstances that may or may not characterize other countries weather forecasting efforts.

In summary, Fleet Numerical operates on very demanding problems in a very demanding environment and is, for good reasons, rather conservative in its use of high-performance computing. The Center is not likely to dramatically change the models, code, or hardware/software platforms it uses until proven alternatives have been developed. Fleet Numerical practitioners make it clear that current models and codes do not (yet) run well on distributed-memory, or non-vector-pipelined systems.12 This is only partly a function of the nature of numerical weather forecasting (discussed in the next section). It is as much, or more, a function of the development and operational environments at Fleet Numerical and the Naval

___________________

12 The Cray vector-pipelined machines reportedly provide a sustained processing rate of 40% of the peak performance on Fleet Numerical codes. For example, the 8-processor C90 has a peak performance of 7.6 Gflops and provides just over 3 Gflops of sustained performance.

82

Research Labs that develop the supporting models. Since the current generation of software tools is inadequate for porting 'dusty deck, legacy" Cray code to massively parallel platforms, the human effort of porting the models would be comparable to developing new models from scratch Furthermore, there is, as described below, little guarantee that the end result would be superior to current practice. The risk to Fleet Numerical operations of moving to dramatically new models and platforms prematurely is considerable. Consequently, those interested in understanding the suitability of non-traditional hardware/software platforms for (military) numerical weather forecasting should consider as well the experience of practitioners at other meteorological centers, who might be less constrained by operational realities and more eager to pioneer innovative approaches.

Computing Platforms and Numerical Weather Forecasting

This section examines the computational characteristics of numerical weather forecasting, with an emphasis on the problems of moving from vector platforms to massively parallel platforms. The first half of the section examines the problem in general. The second half explores a number of specific weather codes and the platform(s) they execute on.

Numerical Weather Forecasting and Parallel Processing

Like many models describing physical phenomena, atmospheric models are highly parallel; however, they are not embarrassingly so. When a model is run, most of the equations are applied in the same way to each of the grid points. In principle, a different processor could be tasked to each grid point. During each time step, each processor would execute the same operations, but on different data. If computation done at one grid point were independent of computation done at another, each processor could proceed without regard for the work being done by another processor. The application would be considered embarrassingly parallel and nearly linear improvements in performance could be gained as the number of processors increases.

However, computation done at one grid point is not, in fact, independent of that done at another. The dependencies between grid points (described below) may require communications between processors as they exchange grid point values. The cost in CPU cycles of carrying out this sharing depends a great deal on the architecture and implementation of the hardware and systems software.

There are three kinds of data dependencies between grid points that complicate the parallel processing of weather forecasting models. First, at the end of each time step, each grid point must exchange values with neighboring grid points. This involves very local communications. How local depends on the algorithm. In higher-order finite difference schemes, the data needed to update a single grid point' s variables are drawn not just from the nearest neighboring grid points, but also from grid points that are two or more grid points away. The amount of communications is subject to the surface/volume phenomenon described earlier.

The spectral methods, an alternative to the finite difference methods, often involve transforms (between physical, Fourier, and spectral space) that have non-local communications patterns.

83

Second, global operations, such as computing the total mass of the atmosphere. are executed periodically. Good parallel algorithms exist for such computations.

Third, there are physical processes (e.g. precipitation, radiation, or the creation of a cloud) which are simulated using numerical methods that draw on data values from a number of different grid points. The communications costs can be considerable. The total physics component of an atmospheric model can involve two dozen transfers or more of data per grid point per time step. However, the grid points involved in such a simulation usually lie along the same vertical column. Assigning grid points to processors such that all grid points in the same vertical column are assigned to the same processor typically reduces communications costs.

In many models, a so-called semi-Lagrangian transport (SLT) method is used to update the moisture field at grid points. This method computes the moisture content at a grid point (the "arrival point") by tracking the trajectory of a particle arriving at this grid point back to its point of origin in the current time step ("departure point"). The moisture field of the departure point is used in updating the moisture field at the arrival point. This computation can involve significant communications overhead if different processors are handling the arrival and departure points.

Finally, overall performance of a model is strongly affected by load-balancing issues related to the physics processes. For example, radiation computations are performed only for grid points that are in sunlight [7]. The computational load can be distributed more evenly among processors, but usually at the cost of great communications overhead.

Performance of Computing Platforms: Empirical Results

Since the first weather forecasting models were run on the ENIAC, meteorologists have consistently been among the first practitioners to make effective use of new generations of supercomputers. The IBM 360/195, the Cray 1, the Cyber 205, the Cray YMP and Cray C90 have all been important machines for weather forecasting [8]. In 1967, weather codes were adapted for the SOLOMON II parallel computer, but the amount of effort devoted to adapting weather models and algorithms to parallel systems, both shared-memory and distributed memory, has grown dramatically only in the 1990s, as parallel systems have entered the mainstream of high-performance computing.

Meteorologists at leading research institutes and centers throughout the world have done a great deal of work in adapting weather codes for parallel platforms and obtaining empirical results. These efforts include:

84

The weather and climate forecasting problem domain currently embraces a significant number of different models, algorithms, implementations, and hardware platforms that make it difficult to provide highly detailed performance comparisons. At the same time, the general conclusions are remarkably consistent:

Ocean Forecasts

Global ocean forecasts have two purposes. The first is to predict the ocean currents. An ocean current has eddies and temperature variations that occur within and around the current flow. The temperature differences become important in submarine and anti-submarine warfare. The side that knows where the current is and is not can both hide its submarines more effectively and improve the chances of detecting other submarines. Ocean currents also become important in

85

ship routing decisions, both for lowering fuel costs and shortening transit times. This latter application of ocean modeling has both civilian and military uses.

The second purpose of an ocean forecast is to provide the sea surface temperature input used as one of the major inputs to the atmospheric forecasts. An ocean model that can provide more accurate surface temperatures will enable an atmospheric forecast with improved accuracy. To date, however, attempts to directly couple the two models to provide this input have not been successful. It is believed this is primarily due to the ocean models using a coarser resolution than the atmospheric forecasts.

Ocean models take as their input the winds, sea state information (especially wave height), and sea surface temperature [16]. An atmospheric forecast can provide heat, moisture, and surface fluxing as inputs to the ocean model. The heat input determines sea surface temperature and the surface fluxing affects wave creation. Wind information can be taken in part from observation, but primarily comes from the atmospheric forecast. The ocean model requires averaging information about the winds; that is, the ocean model needs the average of the winds over a period of days rather than the specific wind speeds at a particular instant. Current sea surface temperature and wave height information is also obtained from satellite data. The wave height information is used in part to determine average wind speeds. The satellite data comes from the GEOSAT series of satellites from the U.S. Both Japan and France have similar satellites. Altimeter equipped satellites can provide additional data about the currents. For example, a cross-section of the Gulf Stream will show variations in ocean height of up to a meter as you move across the current. Current satellite altimeters can measure differences in height of a few centimeters.

Ocean models are complicated by the need to accurately predict the behavior below the surface, yet there is very little data about the current state of the ocean below the surface. A11 of the observation-based data is about the surface or near surface. Thus, a good climatological model is essential to get the deep ocean correct. Statistical inference is used to move from the surface to the deep ocean based on ocean models. There are now about 15 years worth of ocean model data to draw upon.

Ocean models are characterized by the size of the cells used to horizontally divide the ocean. Thus, a 1/4 degree ocean model uses cells that are 1/4 degree longitude by 1/4 degree latitude on each side, yielding a resolution of about 25 km on a side. This is the current model used at Fleet Numerical for their daily ocean current forecasts and run on their 12 processor C90. This model simply examines the first 50 m of water depth, looking at temperature variations due to wind stirring and heat at the surface. This is a global model covering all the world's oceans except the Arctic Ocean. Regional current models are used for finer granularity in areas of particular interest, such as smaller seas, gulfs, and coastal areas. These models run at various finer resolutions that depend on the nature of the region covered and its operational importance. The global model serves as the principle input to the regional models.

This operational global ocean model is too coarse to provide useful current information. In addition, it does not provide sufficient resolution to be useful as input to the atmospheric model.

86

The limitation is one of time--Fleet Numerical allocates one hour of C90 time for each ocean forecast. A secondary limitation of the C90 is insufficient memory

Researchers at the Naval Oceanographic Office (NAVO) at the Stennis Space Center are developing more accurate models. In part, these are awaiting procurement decisions at Fleet Numerical However, even these models will not be able to produce sufficient accuracy within the one hour time constraint to model the ocean currents.

A 1/32 degree (3 km resolution) ocean model is the goal This will provide good coverage and detail in the open ocean, and provide much better input to the regional and coastal models. This goal is still some years out. The current roadmap for Fleet Numerical with regard to ocean current models is shown in Table 12.

| Date | Cell Resolution |

Coverage | |

| present | 1/4 degree | 25 km | global |

| 2001 | 1/8 degree

1/16 degree 1/32 degree |

12-13 km

7 km 3 km |

global

Pacific Atlantic |

| -2007 | 1/32 | 3 km | global |

Table 12: Global ocean model projected deployment [16]

The vertical resolution in these models is six vertical layers. The size of a vertical layer depends on the density of the water, not its depth. Using fixed-height layers would increase the vertical requirement to 30 or more layers to gain the same results. The depth of the same density will change by several hundreds of meters as the grid crosses a current or eddy.

Researchers at NAVO are working with Fortran and MPI. The code gains portability by using MPI. In what has become a standard technique across disciplines, the communication code is concentrated within a few routines. This makes it possible to optimize the code for a particular platform by easily substituting the calls for a proprietary communication library, such as Cray's SHMEM.

It takes five to six years to move a model from an R & D tool to a production tool. In addition to the time needed to develop the improved models, the model must be verified. The verification stage requires leading edge computing and significant chunks of time. The model is verified using "hindcasts"--executions of the model using historic input data and comparing the output with known currents from that time frame. For example, NAVO tested a 1/16 degree (7 km) global ocean model in May-June 1997 on a 256-node T3E-900 (91,000 Mtops). It required 132,000 node hours, about 590 wall clock hours.13 Such tests not only establish the validity of the new model, they improve the previous model. By comparing the results of two models at

___________________

13 224-nodes were used as compute nodes. The remaining 32 nodes were used for I/O.

87

different resolutions, researchers are often able to make improvements on the lower resolution model. Thus, the research not only works toward new production models for the future, but also improves today's production codes.

Ocean models made the transition to MPP platforms much earlier than the atmospheric models. "Oceanographic codes, though less likely to have operational time constraints, are notorious for requiring huge amounts of both memory and processor time" [17]. Starting with the Thinking Machines CM-5, ocean codes on MPP platforms were able to outperform the Cray PVP machines. For example, the performance of an ocean model on a 256-node CM-5 ( 10,457 Mtops) was equivalent to that achieved on a 16-node C90 (21,125 Mtops) [16]. Ocean codes are also more intrinsically scalable than atmospheric codes. There is not as much need to communicate values during execution, and it is easier to overlap the communication and computation parts of the code. Sawdey et al [17], for example, have developed a two-tier code, SC-MICOM, that uses concurrent threads and shared memory within an SMP box. When multiple SMP boxes are available, a thread on each box handles communication to the other SMP boxes. Each box computes a rectangular subset of the overall grid. The cells on the outside edges of the rectangular subset are computed first, then handed to the communication thread. While the communication is in progress, the remaining cells inside the rectangular subset are computed. This code has been tested on an SGI Power Challenge Array using four machines with four CPUs each ( 1686 Mtops each), and on a 32-node Origin2000 ( 11,768 Mtops) where each pair of processors was treated as an SMP.

The dominant machine in ocean modeling today is the Cray T3E. It is allowing a doubling of the resolution over earlier machines. Several systems are installed around the world with 200+ nodes for weather work, including a 696-node T3E-900 (228,476 Mtops) at the United Kingdom Meteorological Office. These systems are allowing research (and some production work) on ocean models to advance to higher resolutions.

Modeling coastal waters and littoral seas

Modeling ocean currents and wave patterns along coasts and within seas and bays is an application area with both civilian and military interests. These include:

88

The military time constraints are hard These regional models are used as forecasts for military operations. Civilian work. on the other hand, does not typically have real time constraints. The more common model for civilian use is a long-term, months to years, model. This is used for beach erosion studies, harbor breakwater design, etc.

Fleet Numerical is not able to run coastal and littoral models for all areas of the world due to limits on computer resources. Currently, about six regional models are run each day at resolutions greater than the global ocean model. These are chosen with current operational needs and potential threats in mind. They include areas such as the Persian Gulf, the Gulf of Mexico, the Caribbean, and the South China Sea.

It is possible to execute a regional model on fairly short notice for a new area. For example, the Taiwan Straits regional model was brought up fairly quickly during the Chinese naval missile exercises. However, better regional models can be produced when the model is run frequently. This allows the researchers to make adjustments in the model based on actual observations over time. Thus, the Persian Gulf model that is executed now is much better than the model that was initially brought up during the Persian Gulf Crisis [16].

Coastal wave and current models are dependent on a number of inputs:

The Spectral Wave Prediction System (SWAPS) uses nested components. The first component is the deep-water wave-action model. This provides the input to the shallow-water wave action model, for water 20m or less in depth. Finally, when needed, the harbor response model and/or the surf model are used to provide high resolution in these restricted areas. The wave action model Is limited by the accuracy of the atmospheric model [18]. This layered model is typical of the approach used in the regional models. 48 hour forecasts are the norm at Fleet Numerical, though, five day forecasts are possible. The limitation is the compute time available. Five day forecasts can be run when required.

While 3 km resolution is the goal for ocean models, this is only marginal for coastal work. A few years ago, it was thought that a 1 km resolution would be a reasonable target. Now, the goal is down to as low as 100 m. The accuracy desired is dependent on the nature of the water in the region. For example, a large, fresh water river flowing into a basin will cause a fresh-water plume. Both the flow of the fresh water and the differing density of fresh and salt water have to be handled correctly by the regional model [16].

An approach that combines the regional models into the global ocean model would be preferred. This would remove the coupling that is now required, and make the regional models easier to

89

implement. However. this will not be feasible for at least five more years. A more accurate global ocean model is needed first. Then, regional models can be folded into the global model as computing resources become available. One major difference in the deep ocean and shallow ocean models is the method of dividing up the vertical layers in the model. The deep ocean model uses six layers whose size is determined by the density of the water, not its depth. Over a continental shelf and on into shallow waters, a fixed-depth set of layers is used. Thus, both the method of determining the layer depth, and the number of layers, is different in the two models. Having both the global ocean model and the regional model in the same code would make the zoning in the vertical layers easier to solve than the present uncoupled approach.

The Navy is working on new ways of delivering regional forecasts to deployed forces. One approach is to continue to compute the regional forecasts at Fleet Numerical or at the Naval oceanographic office (NAVOCEANO), and send the results to those ships that need it. The other alternative is to place computing resources onboard ship to allow them to compute the regional forecast. The onboard ship solution has two principle drawbacks. First, the ship will need input from the global and regional atmospheric forecasts and the global ocean forecast to run the regional model. Sending this data to the ship may well exceed the bandwidth needed to send the completed regional forecast. Second, there would be a compatibility problem with deployed computers that were of various technology generations. Fleet Numerical is looking into options for allowing deployed forces greater flexibility in accessing the regional forecasts when they need it. Among the options are web-based tools for accessing the forecasts, and databases onboard ships that contain the local geographic details in a format such that the regional model can be easily displayed on top of the geographic data [16].

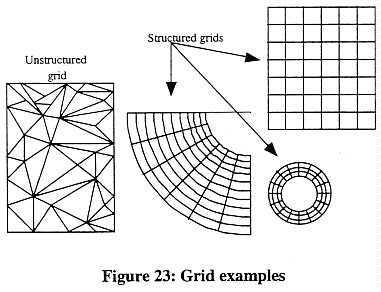

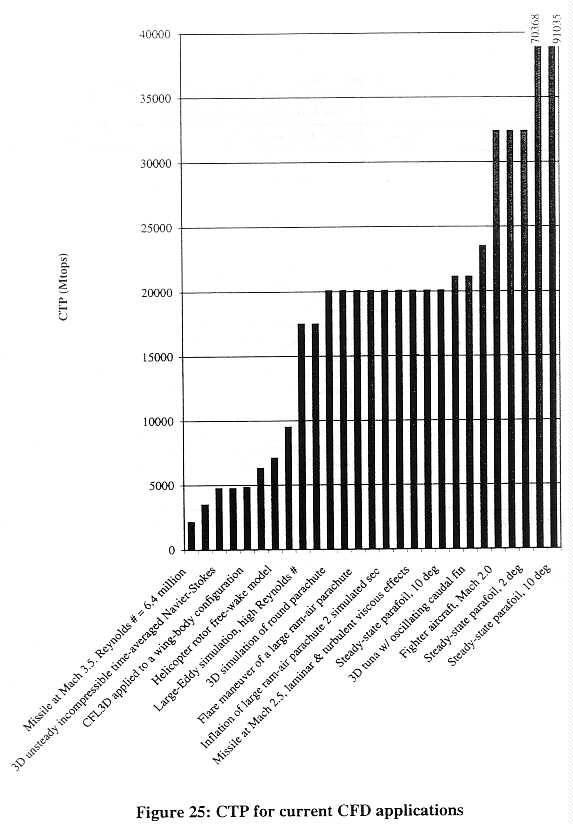

Weather Performance Summary

Figure 24 shows the CTP requirements for weather applications studied in this report. Table 13 shows a summary of the performance characteristics of the weather applications described in this report sorted first by the year and then by the CTP required. It should be noted that some applications appear more than once on the chart and table at different CTPs. This is particularly true of Fleet Numerical's global atmospheric forecast. As Fleet Numerical has upgraded its Cray C90 from 8 to 12 to (soon) 16 processors, it has been able to run a better forecast within the same time constraints. Thus, the increase in CTP does not give a longer-range forecast, but provides a 10-day forecast which has greater accuracy over the 10-day period.

90

91

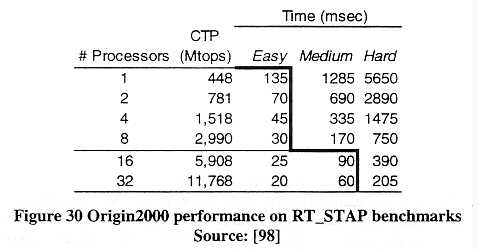

| Machine | Year | CTP | Time | Problem | Problem size |

| Cray C98 | 1994 | 10625 | -5 hrs | Global atmospheric forecast, Fleet Numerical operational run [19] |

480 x 240 grid; 18 vertical layers |

| Cray C90/1 | 1995 | 1437 | CCM2, Community Climate Model, T42 [9] | 128 x 64 transform grid, 4.2 Gflops |

|

| Cray T3D/64 | 1995 | 6332 | CCM2, Community Climate Model, T42 [9] | 128 x 64 transform grid, 608 Mflops |

|

| IBM SP-2/128 | 1995 | 14200 | PCCM2, Parallel CCM2, T42 [7] | 128 x 64 transform grid, 2.2 Gflops |

|

| IBM SP-2/160 | 1995 | 15796 | AGCM, Atmospheric General Circulation Model [12] |

144 x 88 grid points, 9 vertical levels, 2.2 Gflops |

|

| Cray T3D/256 | 1995 | 17503 | Global weather forecasting model, National Meteorological Center, T170 [11] |

32 vertical levels, 190 x 380 grid points, 6.1 Gflops |

|

| Cray C916 | 1995 | 21125 | CCM2, Community Climate Model, T170 [9] |

512 x 256 transform grid, 2.4 Gbytes memory, 53. Gflops |

|

| Cray C916 | 1995 | 21125 | IFS, Integrated Forecasting System, T213 [10] |

640 grid points/latitude, 134,028 points/horizontal layer, 31 vertical layers |

|

| Cray C916 | 1995 | 21125 | ARPS, Advanced Regional Prediction System, v 3.1 [5] |

64 x 64 x 32, 6 Gflops | |

| Paragon 1024 | 1995 | 24520 | PCCM2, Parallel CCM2, T42 [7] | 128 x 64 transform grid, 2.2 Gflops |

|

| Cray T3D/400 | 1995 | 25881 | IFS, Integrated Forecasting System, T213 [10] |

640 grid points/latitude, 134,028 points/horizontal layer, 31 vertical layers |

|

| Cray T3D/256 | 1996 | 17503 | 105 min | ARPS, Advanced Regional Prediction System, v 4.0, fine scale forecast [14] |

96 x 96 cells, 288 x 288 km, 7 hr forecast |

| Cray C916 | 1996 | 21125 | 45 min | ARPS, Advanced Regional Prediction System, v 4.0, coarse scale forecast [14] |

96 x 96 cells, 864 x 864 km, 7 hr forecast |

| Origin2000/32 | 1997 | 11768 | SC-MICOM, global ocean forecast, two-tier communication pattern [17] |

||

| Cray C912 | 1997 | 15875 | ~5 hrs | Global atmospheric forecast, Fleet Numerical operational run [19] |

480 x 240 grid; 24 vertical layers |

| Cray C912 | 1997 | 15875 | 1 hr | Global atmospheric forecast, Fleet Numerical operational run [16] |

1/4 degree, 25 km resolution |

| Cray T3E- 900/256 |

1997 | 91035 | 590 hrs | Global ocean model "hindcast" [16] | 1/16 degree, 7 km resolution |

| Cray C916 | 1998 | 21125 | ~5hrs | Global atmospheric forecast, Fleet Numerical operational run [19] |

480 x 240 grid; 30 vertical layers |

Table 13: Weather applications

92

The Changing Environment for Numerical Weather Forecasting

Future Forecasting Requirements

In the next five years, there are likely to be significant changes in the forecasting models used. and the types and frequency of the forecasts delivered. The following are some of the key anticipated changes:

Coupling of Oceanographic and Meteorological Models (COAMPS) and development of better littoral models. Currently, meteorological forecasts are made with minimal consideration of the changing conditions in the oceans. Oceans clearly have a strong impact on weather, but in the current operational environment, the computing resources do not permit a close coupling. The COAMPS models will be particularly important for theater, mesoscale, and battlespace forecasting which are likely to be focused on littoral areas in which the interplay of land, air, and ocean creates particularly complicated (and important) weather patterns. Current estimates are that such computations will require a Teraflop of sustained computational performance and 640 Gbytes of memory.

Shorter intervals between runs. Currently, the models used by Fleet Numerical are being run between one and eight times per day. In the future, customers will want hourly updates on forecasts. This requirement places demands on much more than the computational engine. The amount of time needed to run the model is just one component of the time needed to get the forecast in the hands of those in the field who will make decisions based on the forecast. At present, it takes several times longer to get the initial conditions data from the field and disseminate the final forecast than it does to run the model itself.

Greater resolution in the grids. In the next 5-6 years, Fleet Numerical practitioners anticipate that the resolution of the models used will improve by a factor of two, or more. Table 14 shows these changes. Some of the current customers view resolutions of less than 1 km as a future goal. Weather forecasting with this resolution is necessary for forecasting highly local conditions, such as the difference in weather between neighboring mountain peaks or valleys. Such forecasting is closely related to the next requirement of the future:

Emphasis on forecasting weather that can be sensed. As battlespace forecasting becomes practical, the ability to predict weather phenomena that will impact the movement of troops and equipment, placement of weapons, and overall visibility will be important sources of military advantage. Forecasts will be designed to predict not only natural weather phenomena such as fog, but also smoke, biological dispersion, oil dispersion, etc. These forecasts have obvious civilian uses as well.

Ensemble Forecast System. An Ensemble Forecast System (EFS) using NOGAPS has been included in the operational run at Fleet Numerical. The purpose of EFS is both to extend the useful range of numerical forecasts and to provide guidance regarding their reliability. The Fleet Numerical ensemble currently consists of eight members, each a ten-day forecast. A simple average of the eight ensemble members extends the skill of the medium range forecast by 12 to 24 hours. Of greater potential benefit to field forecasters are products that help delineate forecast

93

uncertainty. Another alternate method is to make simple average ensemble forecasts (SAEF) of several Weather Centers with different analyses and models. Both of these systems attempt to evaluate the effect of Chaos theory on weather forecasting by using many different events to determine the probability distributions of the weather in the future.

| Model Type | Grid Resolution: 1995 | Grid Resolution: 2001 (expected) |

| Global Analysis | Horizontal: 80 km Vertical: 15 levels |

Horizontal: 40 km Vertical: (as required) |

| Global Forecast | Horizontal: 80 km Vertical: 18 levels |

Horizontal: 50 km Vertical: 36 levels |

| Regional 9000 km x 9000 km |

Same as Global Forecast | Same as Global Forecast |

| Theater 7000 km x 7000 km |

Horizontal: 45 km Vertical: 36 levels |

Horizontal: 18 km Vertical: 50 levels |

| Mesoscale 3500 km x 3500 km |

Horizontal: 15 km Vertical: 36 levels

Horizontal: 20km |

Horizontal: 6 km Vertical: 50 levels |

| Battlespace 1000 km x 1000 km |

N/A | Horizontal: 2 km Vertical: 50 levels |

Table 14 Current and projected resolution of weather forecasting models

used by FNMOC.

Future Computing Platforms

Fleet Numerical is currently in a procurement cycle. Their future computing platforms are likely to include massively parallel systems. The migration from the current vector-pipelined machines to the distributed memory platforms is likely to require a huge effort by the Naval Research Labs and Fleet Numerical practitioners to convert not only their codes, but also their solutions and basic approaches to the problem. Gregory Pfister has the following comment about the difficulty of converting from a shared-memory environment to a distributed memory one:

This is not like the bad old days, when everybody wrote programs in assembler language and was locked into a particular manufacturer's instruction set and operating system. It's very much worse.It's not a matter of translating a program, algorithms intact, from one language or system to another; instead, the entire approach to the problem must often be

94

rethought from the requirements on up, including new algorithms. Traditional high-level languages and Open Systems standards do not help [20].

A great deal of effort continues to be made by researchers at the Naval Research Laboratories and elsewhere to adapt current codes to parallel platforms and develop new models less grounded in the world of vector-pipelined architectures. There is little doubt that parallel platforms will play increasingly central roles in numerical weather forecasting. What remains to be seen is how well current and future parallel architectures will perform relative to the vector-pipelined systems.

Currently, work is in progress on porting the NOGAPS global forecast model at Naval Research Laboratory - Monterrey for use as a benchmark in the procurement process. The code is being tested on several platforms including the T3E, SP-2 and Origin2000. The benchmark will be ready by spring 1998 for use in procurement decisions. There are problems with load balancing in the~parallel version. For example, the Sun is shining on half the planet (model) and not the other half. The half in sunshine requires more computation. Using smarter load balancing can solve this. One method employed is latitude ring interleaving to provide a balance of sunshine and dark latitude bands to one processor.

Microprocessor hardware is likely to continue its breathtaking rate of advance through the end of the century. The prevalence of systems based on commercial microprocessors is likely to result in steady improvements in the compilers and software development tools for these platforms. These developments are likely to increase the sustained performance as a percentage of peak performance of the massively parallel systems.

Computational Fluid Dynamics

Computational Fluid Dynamics (CFD) applications span a wide range of air and liquid fluid flows. Application areas studied for this report include aerodynamics for aircraft and parachutes, and submarine movement through the water. The basic CFD equations are the same for all these applications. The differences lie in specifics of the computation and include such factors as boundary conditions, fluid compressibility, and the stiffness or flexibility of solid components (wings, parafoils, engines, etc.) within the fluid. The resolution used in an application is dependent on the degree of turbulence present in the fluid flow, size of the object(s) within the fluid, time scale of the simulation and the computational resources available.

We begin with a description of CFD in aerodynamics, specifically airplane design. Much of the general description of CFD applies to all the application areas; thus, subsequent sections describe only the differences from the aerodynamic section.

CFD in Aerodynamics

The Navier-Stokes (N-S) equations are a set of partial differential equations that govern aerodynamic flow fields. Closed-form solutions of these equations have only been obtained for a few simple cases due to their complex form.

Numerical techniques promise solutions to the N-S equations; however, the computer resources necessary still exceed those available. Current computing systems do not provide sufficient

95

power to resolve the wide range of length scales active in high-Reynolds-number turbulent flows.14 Thus, all simulations involve solution of approximations to the Navier-Stokes equations [2 l]. The ability to resolve both small and large turbulence in the fluid flow separates the simple solutions from the complex solutions.

Solution techniques

Ballhaus identifies four stages of approximation in order of evolution and complexity leading to a full Navier-Stokes solution [21]:

| Stage | Approximation | Capability | Grid | Compute factor |

| I | linearized inviscid | subsonic/supersonic pressure loads vortex drag |

3 x 103 | 1 |

| II | nonlinear inviscid | Above plus: transonic pressure loads wave drags |

105 | 10 |

| III | Reynolds averaged Navier-Stokes |

Above plus: separation/reattachment stall/buffet/flutter total drag |

107 | 300 |

| IV | large eddy simulation |

Above plus: turbulence structure aerodynamic noise |

109 | 30,000 |

| full Navier-Stokes | Above plus: laminar/turbulent transition turbulence dissipation |

1012 to 1015 | 3 x 107 to 3 x 1010 |

Table 15: Approximation states in CFD solutions

There are 60 partial-derivative terms in the full Navier-Stokes equations when expressed in three dimensions. Stage I uses only three terms, all linear. This allows computations across complex geometries to be treated fairly simply.

Subsequent stages use progressively more of the N-S equations. Finite-difference approximations are used and require that the entire flow field be divided into a large number of small grid cells. In general, the number of grid cells increases with the size of the objects in the flow field, and with the detail needed to simulate such features as turbulence.

In aircraft design, the stage I approach can supply data about an aircraft in steady flight at either subsonic or supersonic speeds. Stage II allows study of an aircraft changing speeds, particularly crossing the sound barrier. It also supports studies of maneuvering aircraft, provided the

_______________________

14 The Reynolds number provides a quantitative measure of the degree of turbulence in the model. The higher the Reynolds number, the more accurately turbulence can be represented by the model.

96

maneuvers are not too extreme. Thus, an aircraft making a normal turn, climb, and/or descent can be modeled at this stage. A jet fighter making extreme maneuvers, however, requires the addition of the viscous terms of the N-S equations. Stage III can provide this level. It uses all the N-S equations, but in a time-averaged approach. The time-averaged turbulent transport requires a suitable model for turbulence, and no one model is appropriate for all applications. Thus, this is an area of continuing research.

Simulating viscous flow complete with turbulence requires another large step up the computational ladder. Hatay et al [22] report the development of a production CFD code that does direct numerical simulation (DNS) that includes turbulent flow. DNS is used to develop and evaluate turbulence models used with lower stage computations. The code is fully explicit; each cell requires information only from neighboring cells, reducing the communication costs. Hatay et al give results for both NEC SX4 and Cray C90 parallel machines. The code has also been ported to the T3D/E and SP-2 MPP platforms. The code illustrates some of the difficulties with moving CFD applications to parallel platforms. Parallel platforms offer the promise of more memory capacity, necessary to solve usefully large problems. The first task was to run the code on a single processor, but make use of all the memory available on the machine. Then, considerable effort is required to get the communication/computation ratio low enough to achieve useful speedup over more than a few processors. Finally, the code was ported to the distributed memory platforms.

Aircraft design

Building on the Basics [1] established that aircraft design, even for military aircraft, generally falls at or below the threshold of uncontrollability. This has not changed since the 1995 study. Two things have occurred in the past two years, both continuations of trends that stretch back to the early 1990's. First, aircraft design is moving to what can be termed "whole plane" simulations. This refers to the three-dimensional simulation of an entire aircraft, including all control surfaces, engine inlets/outlets, and even embedded simulations of the engines. Within engine development, this same principle is evident in moves to develop high fidelity simulations of each engine component and combine them into a single run. While such whole plane and whole engine simulations are still in development, significant progress has been made.

Second, aircraft codes are moving to parallel platforms from vector platforms. This transition has lagged behind what has been done in other application areas. This is largely due to the poor scalability of the applications used in aerodynamics, and to the memory bandwidth and memory size requirements of the applications. A secondary consideration is the need to validate a code before industry is willing to use it.

Whole plane and whole engine designs

Historically, aircraft designers have used numerical simulation to supplement wind tunnel testing. The numerical simulations support more efficient use of expensive wind tunnel time. In turn, this combination has enabled designers to reduce the number of prototypes that need to be built and tested when designing new airframes and engines. This trend continues today. The main

97